Introduction

The success of ChatGPT has generated massive interest in generative AI, especially in the language processing field. LLM models are rapidly improving, and there are increased initiatives to use them within companies.

For customer support, automating the processing of incoming requests is a popular topic. For example, automatically generating draft responses can have several advantages: it saves time in processing requests and ensures consistency in responses across agents.

However, generative AI can produce false information, known as hallucinations, which can have critical consequences. Spreading incorrect information can lead to customer dissatisfaction and impact the company's reputation.

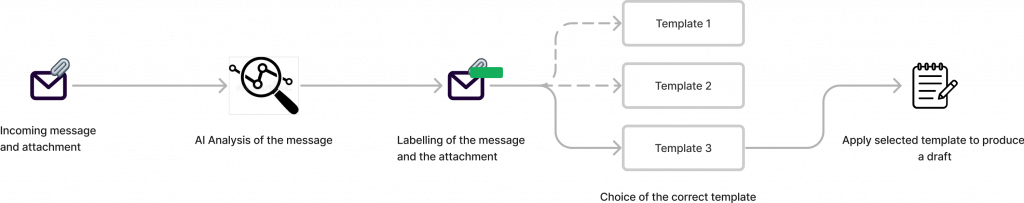

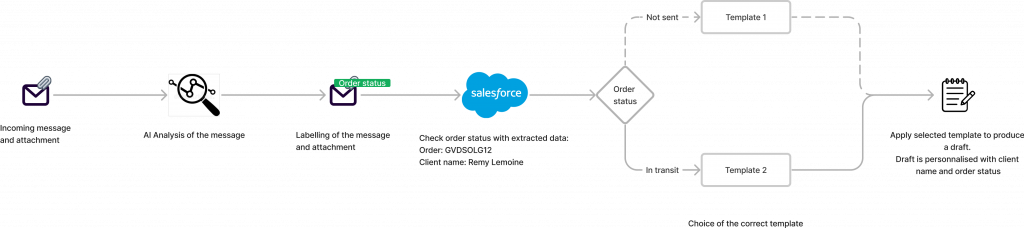

On the other hand, analytical AI, such as the one we have developed at Golem.ai and that is used in our product InboxCare, does not suffer from hallucinations. Data processing is predictable and explainable, it synchronises with CRMs, and provides a dynamic response draft.

This response draft is generated in the form of a "template": based on the category we detect in a message, we select the corresponding template.

InboxCare can, of course, detect the name of the customer and personalize the response accordingly, but it can also use the information detected in a message to enhance its response. For example, detecting an order number allows it to retrieve the corresponding status from a database and thus enrich the draft response.

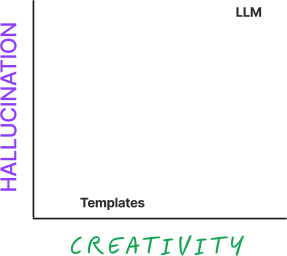

The templates, by definition, have limited creativity. It is possible to customize the response dynamically based on some extracted information (such as the client's name or order status), but the tone cannot be changed.

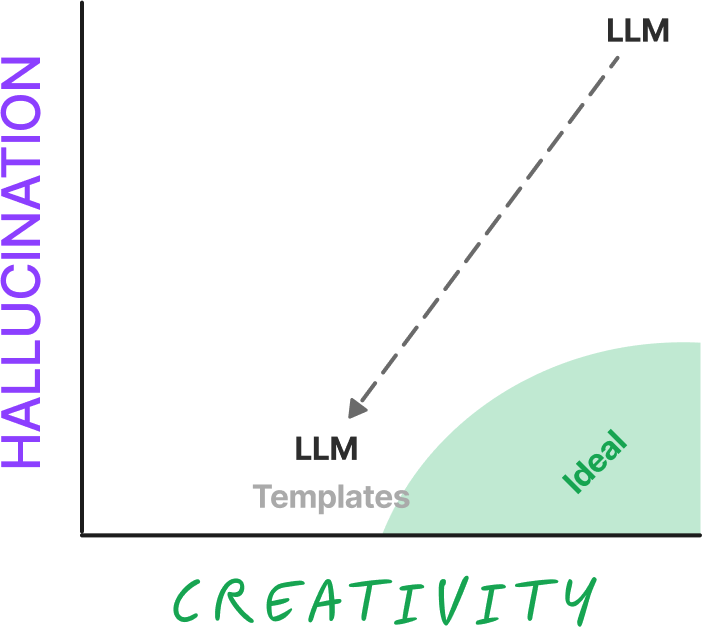

To illustrate these differences, let's imagine a two-axis graph, with hallucination on one side and response creativity on the other. Let's place the LLM approach and the template approach on this graph:

As mentioned before, the LLM is on the top right corner : It can be very creative, but often suffers from hallucinations. The templates on the other hands are at the bottom, closer to the center : They allow for less creativity, but do not suffer from hallucinations.

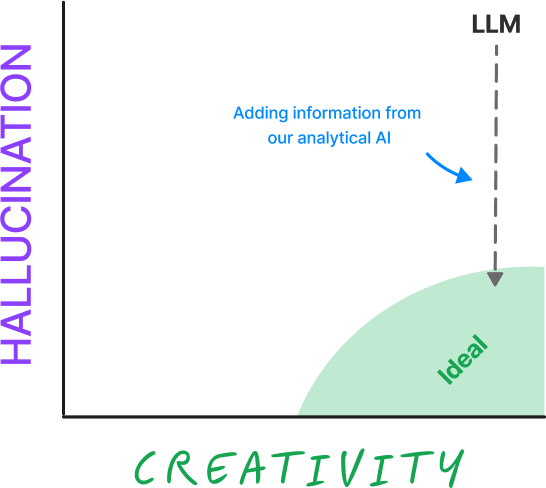

With this study, our goal is to make the most of both worlds: Use the extracted data from our analytical AI to minimize the hallucinations of an LLM, while still benefiting from its creativity in generating responses:

Framework of our study

To test our theory, we have created a few fake emails addressed to a support client agent of a company called "ACME," specializing in interior furniture, on the following subjects:

- Cancellation : The customer wants to cancel an order

- Complaint : The customer expresses dissatisfaction with an order (damaged product, wrong product, etc.)

- Modification : The customer wants to modify the order

- Order : The customer wants to place an order

- Order Status : The customer requests a delivery date

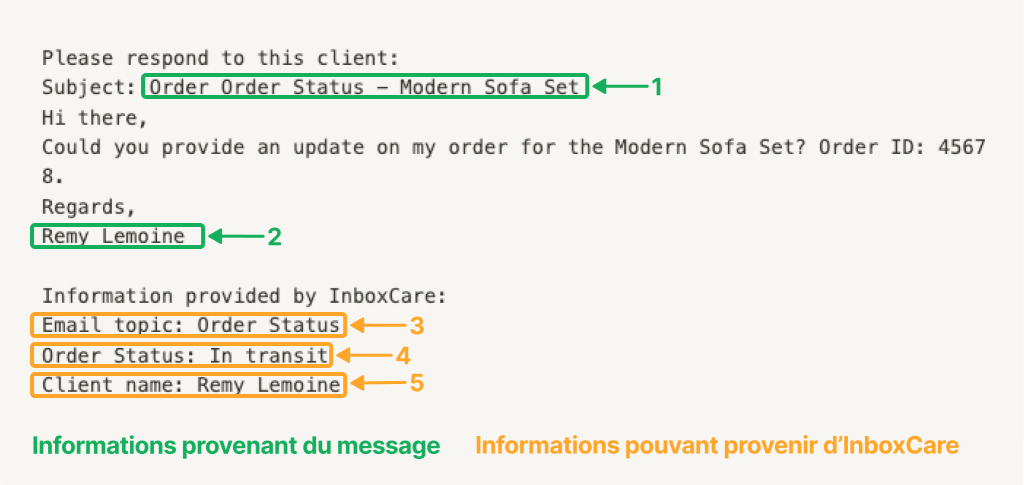

For each message, we simulated different combinations by adding or removing information from the message itself:

- 1: Message subject

- 2: Customer signature

As well as information that could come from InboxCare:

- 3: Message category

- 4: Consolidated order status information (when applicable)

- 5: Detected customer name

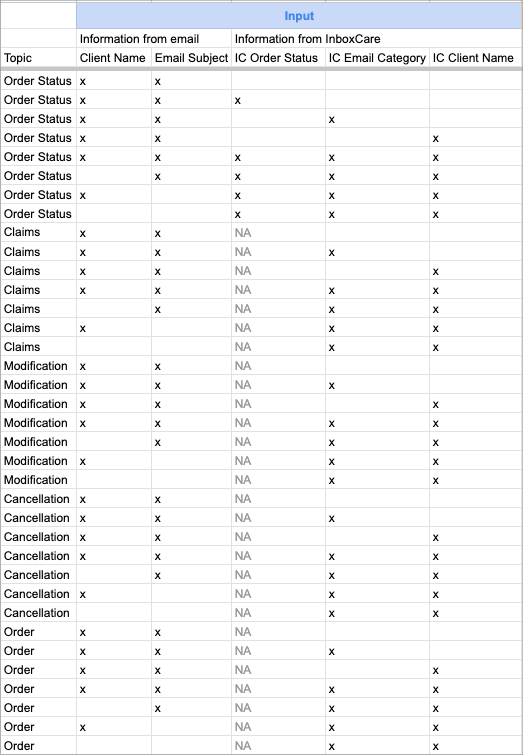

During our study, we tested the following combinations:

To conclude, we have chosen to use the Llama 2 70b model on H100 GPUs hosted by Scaleway. Si vous souhaitez en apprendre plus sur notre retour d’expérience quant à cette installation, nous y avons consacré un second article.

To summarize, here are the key elements of our tests:

| Tested Model | Llama 2 70b |

| GPU | H100 |

| Hosting Provider | Scaleway |

| Tested Approaches* | Zero-Shot learning Few-Shot learning |

| Number of Tests Conducted | 92 |

| Information that can come from InboxCare | Message Category Customer Name Order Status |

| Tested Message Types | Cancellation, Complaint, Modification, Order, Order Status |

| Language | English |

Input Parameters

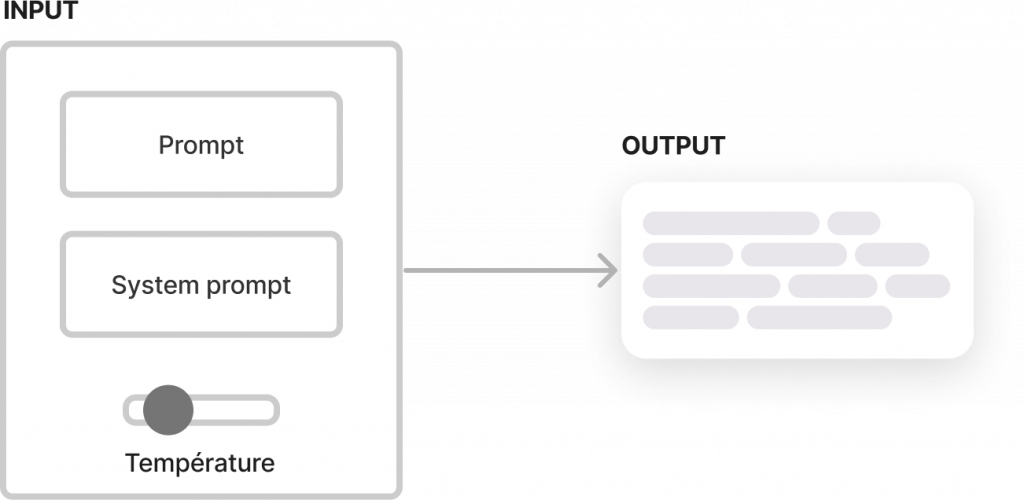

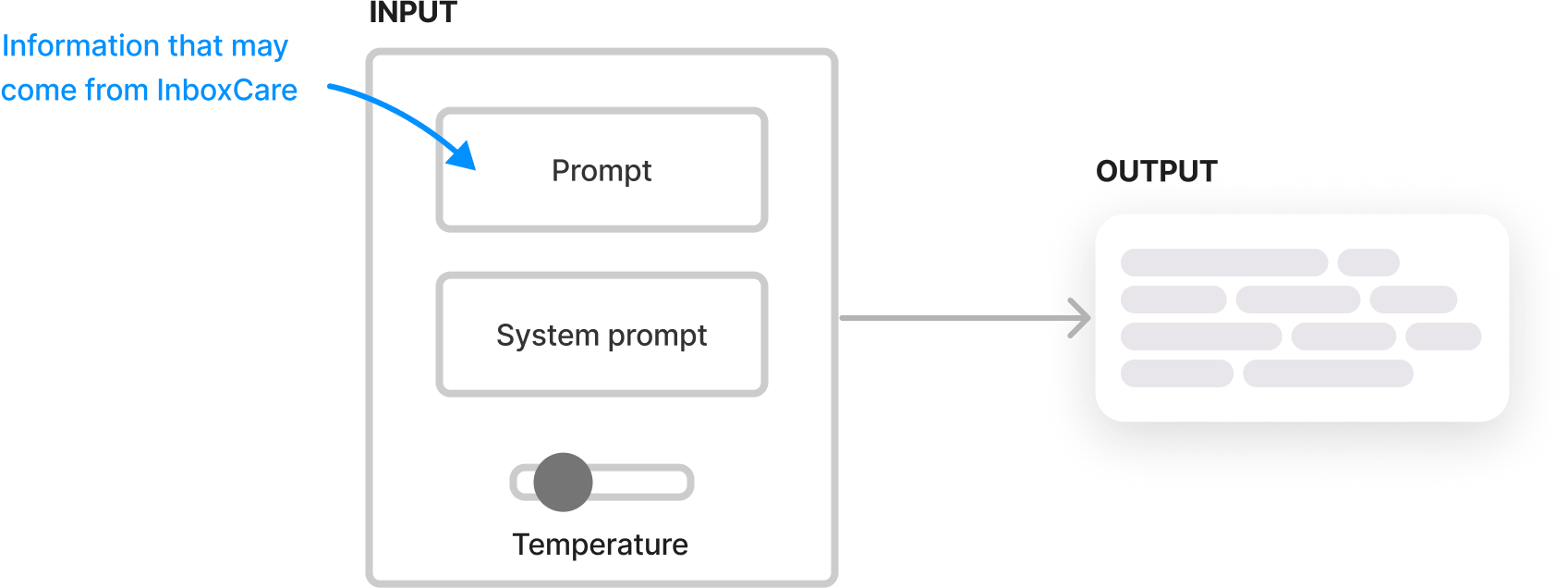

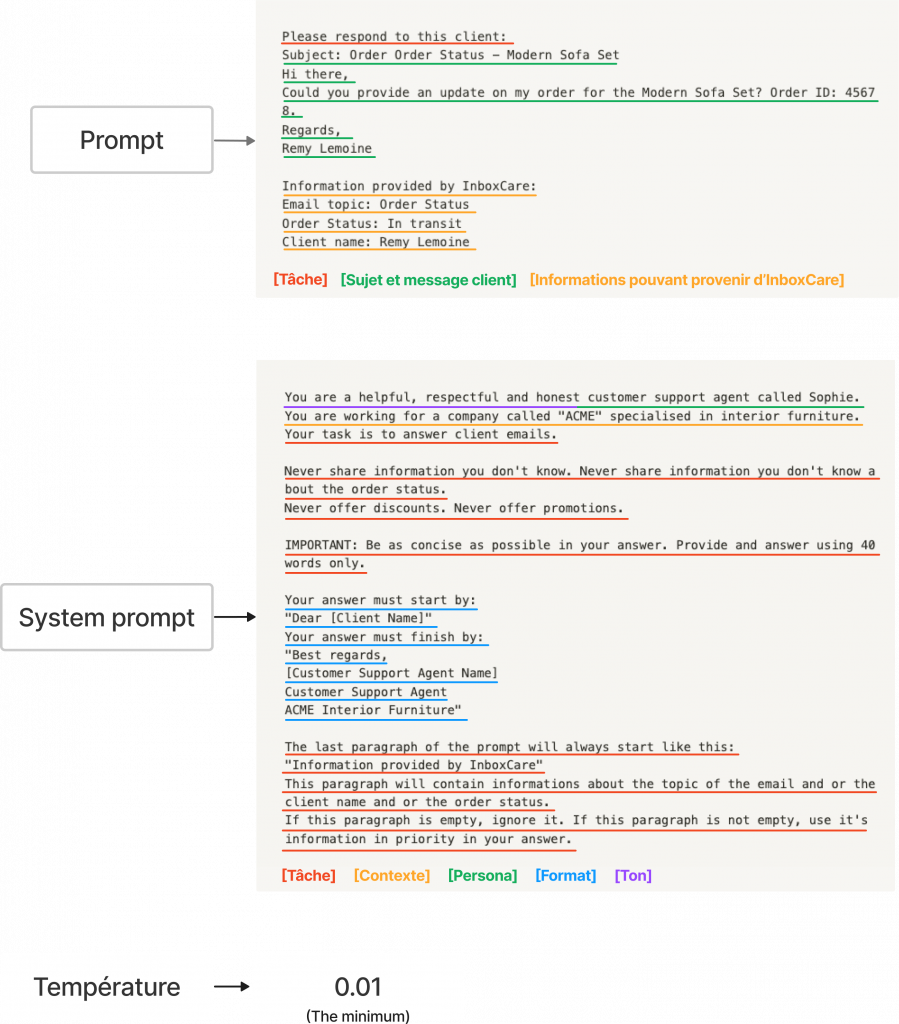

Before diving into the results, let's examine the most important input parameters of an LLM (referred to as "Input") that we can modify to vary the model's response (referred to as "output"): The prompt, the prompt system and temperature:

- Prompt : In our case, it will contain the customer's request accompanied by a simple instruction like “Respond to this customer”

- System prompt : Defines the context and behavior that the model should follow. In our case, the instructions relate to its role as a customer support agent, the tone to adopt, the format of the signature, etc. It is important to note that while the prompt changes for each new request, the system prompt remains the same.

- Temperature : Ranging from 0.01 to 5, it allows controlling the degree of creativity in the output generated by the model.

- High temperature: Creative results, strong hallucinations.

- Low temperature: More consistent results, weaker hallucinations.

Thus, our objective for this study is to add information from InboxCare to the prompt in order to limit hallucination in the output:

Note that other approaches were possible, but this one seemed to be the simplest and sufficient to test our theory.

After numerous tests, we found that the following input parameters yielded the best results:

Results

Here is the same table as before, but this time highlighting a "hallucination score" for each of the tests conducted. This "hallucination score", ranging from 0 to 3, was subjectively assigned following the following rules:

- Score of 0 : No hallucination in the response

- Score of 1 : L’hallucination est mineure. Par exemple, le modèle demande une information supplémentaire au client (telle que son adresse ou son numéro de téléphone) ou la confirmation du produit demandé.

- Score of 2 : Significant hallucination. For example, the model invents a delivery time.

- Score of 3 : Critical hallucination. For example, the model automatically confirms a cancellation or invents the availability or unavailability of a product in stock.

The results seem random and have no correlation with the variables used.

Pour mieux comprendre, analysons en détails deux cas particulièrement intéressants* : D’une part, un cas ou aucune information pouvant provenir d’InboxCare n’a été ajoutée (test 1) et d’autre part, un cas ou les trois informations pouvant provenir d’InboxCare ont été ajoutées (test 5).

*These two cases are highlighted in yellow in the table above.

Focus test 1: No addition of information from InboxCare

Input

Please respond to this client:

Subject: Order Order Status - Modern Sofa Set

Hi there,

Could you provide an update on my order for the Modern Sofa Set? Order ID: 45678.

Regards,

Remy Lemoine

Information provided by InboxCare:Output

Dear Remy Lemoine,

Thank you for reaching out to us regarding your order for the Modern Sofa Set (Order ID: 45678). Our teamis working hard to ensure that your order is processed and shipped as soon as possible. Unfortunately, we do not have any updates available at this time.

Please be assured that we will keep you informed throughout the process, and you will receive an email notification once your order has been shipped. If you have any further questions or concerns, please feel free to reach out to us.

Best regards,

Sophie

Customer Support Agent

ACME Interior FurnitureOn the positive side, the LLM correctly understood the subject of the email, detected the client's name, order ID, and the item in question, and followed the instructions of the system prompt.

However, the generated response included some made-up information:

- “we will keep you informed throughout the process”

- “you will receive an email notification once your order has been shipped”

Now let's look at the same example, but adding the three pieces of information that could come from InboxCare.

Focus test 5: Adding information from InboxCare

Input

Please respond to this client:

Subject: Order Order Status - Modern Sofa Set

Hi there,

Could you provide an update on my order for the Modern Sofa Set? Order ID: 45678.

Regards,

Remy Lemoine

Information provided by InboxCare:

Email topic: Order Status

Order Status: In transit

Client name: Remy LemoineOutput

Dear Remy Lemoine,

Thank you for reaching out to us regarding your order for the Modern Sofa Set (Order ID: 45678). We apologize for any inconvenience caused and appreciate your patience.

According to our records, your order is currently in transit. Our team is working hard to ensure that it reaches you as soon as possible.

Please note that we do not have access to real-time tracking information.

However, we can inform you that our delivery team is doing their best to get your order delivered within the estimated timeframe.

We understand that delays can happen, but rest assured that we are doind everything possible to minimize any disruptions. If you have any further

questions or concerns, please feel free to reach out to us.

Best regards,

Sophie

Customer Support Agent

ACME Interior FurnitureBy adding information from InboxCare to the request, the LLM was able to generate a more accurate and relevant response by providing the customer with an update on the status of their order.

Conclusion

Dans l’ensemble, nous avons effectué environ 100 tests en variant les messages, les paramètres et les prompts. Voici nos principales conclusions :

- The LLM is already capable of understanding and effectively using the information contained in the message (request subject and customer name). Adding the same information from InboxCare does not seem to affect the quality of the result.

- However, InboxCare’s consolidation to obtain order status information does affect the quality of the result. In this case, we provided the LLM with information it did not have.

- Prompt engineering greatly influences the quality and accuracy of the generated responses. This work is crucial and should not be underestimated as it requires a lot of time and expertise. Moreover, this practice is still more of an art than a science today. Even the creators of Llama continue to discover how this model works.

- Imposing a limit on the response size (in our study it was 40 words) as well asimposing a minimum temperature (in our study it was 0.01) are the two factors that most effectively limit hallucinations.

💡 Recently, a new profession has emerged: "Prompt Engineer." Their role is to find the most suitable queries (or prompts) for a given need. To learn more, we invite you to read this article on the subject.

Nous semblons donc faire face à un paradoxe : Afin de limiter au maximum les hallucination du LLM, nous avons “cadré” fortement le LLM via les instructions dans les prompts ainsi que la température. Mais ce faisant, nous réduisons ainsi la créativité des réponses. Nous ne parvenons pas à atteindre notre idéal

Therefore, the question of the usefulness of using an LLM, instead of a template-based system, arises because limiting hallucinations requires a lot of effort in prompt engineering, without guaranteeing their complete elimination. This question is even more crucial if we want to apply these technologies to automatic response. In this case, it is simply impossible to let any hallucination pass.

Of course, further experimentation is necessary to fully exploit its potential. The possible approaches are listed below.

Other possible approaches

- Explore other LLM models. For example, the Mistral 7b model shows great promise. By experimenting with different LLMs, we can identify the model that best suits our specific needs and goals.

- Refine prompt engineering. By putting in more effort to refine the prompts, we can improve the understanding of the LLM model and get better responses.

- Specifically fine-tune the LLM model for our use case of customer support. This process, called "fine-tuning", allows the model to learn from examples that closely resemble the types of messages it will encounter in real-life situations. By fine-tuning the model, we can improve its understanding of customer requests and the quality of its generated responses.

- Trigger the use of an LLM model only for certain message categories. For example, the "Information Request" category could trigger the use of an LLM model as a slight hallucination might be acceptable. However, detecting a "Claims" category would trigger the use of a draft template, as no hallucination would be acceptable.

In the second part of this article, we will explore our experience of running a Language Model (LLM) on our infrastructure, using Scaleway's latest GPU offering. We will cover the configuration and setup of the LLM, including the hardware and software used.